Drivers Abuse Tesla's Autopilot System

- yassine zeddou

- Oct 6, 2024

- 2 min read

Updated: Dec 12, 2024

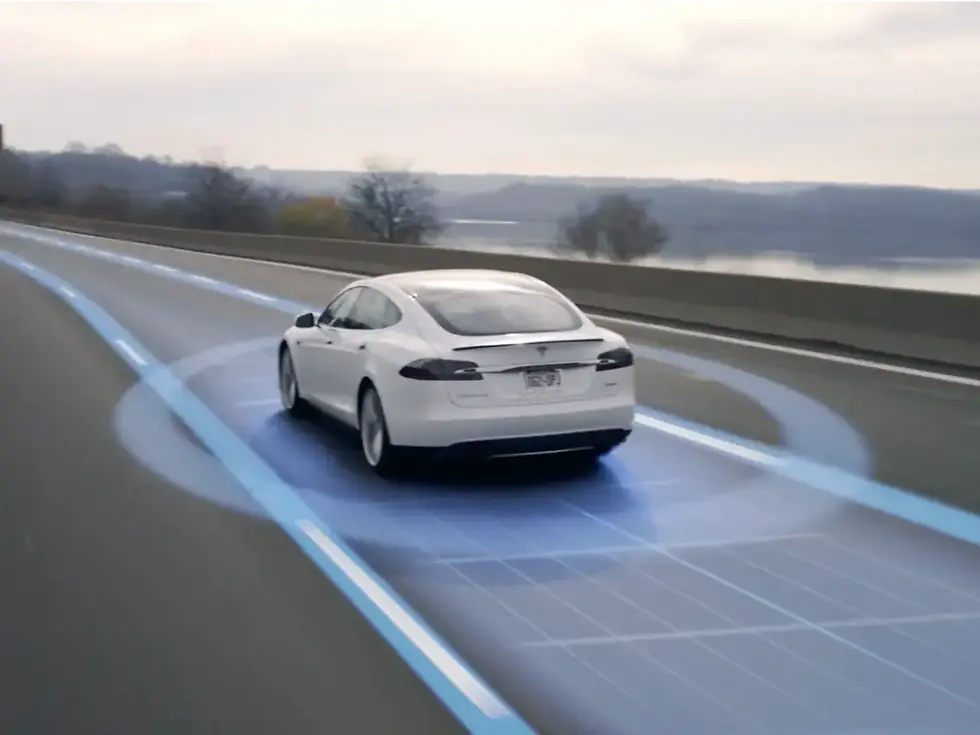

Tesla's Autopilot system is designed to improve driving safety and comfort by providing semi-autonomous features. Some drivers, however, exploit the system by relying on it beyond its intended capacity, which leads to safety concerns and incidents. In this article we look at how drivers abuse Tesla's Autopilot system and consider the risks associated with such abuse.

Driver assistance systems, such as Tesla's Autopilot, are designed to reduce the frequency of accidents, but drivers are more likely to become distracted as they get used to them, according to a new study released Tuesday by the Insurance Institute for Highway Safety (IIHS).

Autopilot and Volvo's Pilot Assist system were each examined in two separate studies by IIHS and the AgeLab at the Massachusetts Institute of Technology. Both studies showed that drivers tended to engage in distracting behavior while still meeting the minimum attention requirements of these systems.

In one study, researchers analyzed how the driving behavior of 29 volunteers, each given a 2017 Volvo S90 with Pilot Assist, changed over four weeks. The researchers focused on whether volunteers were more likely to engage in non-driving behavior when using Pilot Assist on motorways than when driving on motorways without assistance.

According to the study, the likelihood that drivers "checked their phones, ate sandwiches, or performed other visual manual activities" was much higher than when they were driving unassisted. This trend generally intensified over time as drivers got used to the system, but both studies showed that some drivers were distracted from the start.

The second study looked at 14 volunteers driving a 2020 Tesla Model 3 with Autopilot for one month. For this study, the researchers selected people who had never used Autopilot or an equivalent system before, and looked at how these drivers triggered the system's attention warnings.

The researchers found that newcomers to Autopilot quickly learned the timing of the system's attention alerts, which let them keep warnings from escalating into more serious interventions such as emergency braking and system lockouts.

"In both studies, drivers adapted their behavior to engage in distracting activities," IIHS President David Harkey said in a statement. "This shows why semi-automation systems need more robust protection measures to prevent misuse."

Earlier this year, IIHS argued on the basis of a separate data set that assisted driving systems do not improve safety, and it has advocated for more in-car safety monitoring to prevent adverse effects on safety. In March 2024, the institute completed testing of 14 driver assistance systems from 9 brands and found that most of them were too easy to abuse. In particular, it found that Autopilot misled drivers into thinking it was more capable than it really was.

The shortcomings of Autopilot have attracted the attention of US safety regulators. In a 2023 recall, Tesla limited the operation of its Full Self-Driving Beta system, which regulators described as an "unreasonable risk to motor vehicle safety." Tesla continues to use the misleading name "Full Self-Driving", although the system does not provide such functionality.

Conclusion

Misuse of Tesla's Autopilot system undermines its purpose and poses serious safety risks to drivers and the public. Responsible use of the technology is essential to get its benefits. The studies point out that stricter protective measures are needed to prevent abuse.
